Tag: webmaster notes

Alternatives to Google Domains

Posted by Alex On November 9, 2023

How to reboot a server in DigitalOcean

Posted by Alex On November 15, 2022

Sitemap.xml files: what they are for, how to use them, and how to bypass the “Too many URLs” error and size limits

Posted by Alex On October 20, 2022

How to prevent search engines from indexing only the main page of a site

Posted by Alex On September 1, 2022

iThemes Security locked out a user – how to log in to the WordPress admin when the user is banned (SOLVED)

Posted by Alex On July 11, 2022

WordPress error “Another update is currently in progress” (SOLVED)

Posted by Alex On January 26, 2022

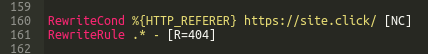

How to block access to my site from a specific bux (paid-to-click) site or any other source of unwanted traffic

Posted by Alex On August 21, 2021

How to protect my website from bots

Posted by Alex On August 19, 2021

How to block by Referer, User Agent, URL, query string, IP and their combinations in mod_rewrite

Posted by Alex On May 28, 2021

How to prevent Tor users from viewing or commenting on a WordPress site

Posted by Alex On October 19, 2022